As the sands shift around digital marketing, says Mike Wickham of Impression, it might be time to reconsider how we target customers online.

Good marketing should always be a win-win. The consumer should win because they're being offered a relevant option for whatever it is they're in the market for. The brand should win by meeting that need and by providing its product or service to the right audience, ideally at the right cost.

As someone who navigates both the worlds of marketing and consumerism, I'm noticing a worrying trend towards fewer, less relevant options being presented across paid media platforms.

The algorithm isn’t always our friend

Let me give you an example. I was recently on a quest to find the perfect pair of shoes. Versatile enough for all seasons, suitable for both smart and casual attire, and durable for years to come. Alas, I’m still searching, and not just because I’m incredibly fussy.

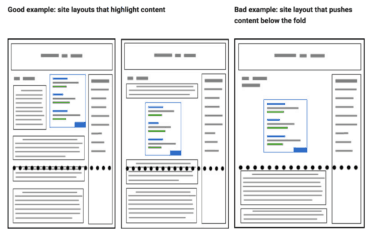

My customer journey began like most, with a broad search on Google, which served me a range of options from boots to sandals. Not quite right, but after navigating to the shopping tab, I found a few items closer to what I was picturing in my head.

After clicking on a few options from different brands and browsing their catalogues, I still hadn't found the dream pair, but I had at least narrowed down the style I was looking for. So I returned to Google and provided a bit more detail for my next search (long-tail searches do still exist), only to receive virtually the same list of items in the carousel as before.

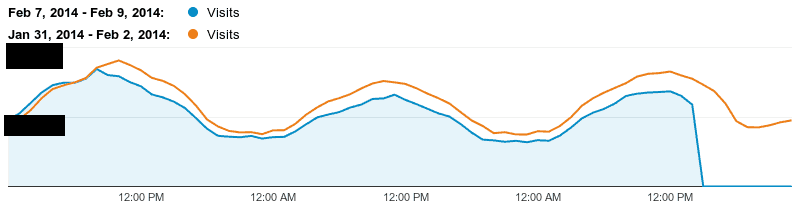

The results came almost exclusively from the three brands I had just visited. For the following days and weeks, browsing across the web offered me few new suggestions; I was served the same items time and time again. Poorly set frequency caps are partly at fault here, but the crux of it is that my behaviour signalled interest in these items, and so the algorithms pushed hell for leather to get me to convert.

I sympathize with these brands, and with advertisers in general, who face similar challenges. With a shift towards broader audience definitions and a heavier reliance on machine automation, they're somewhat at the mercy of the algorithms when it comes to identifying the right customer.

How to identify the most likely customers

So what can we do to help differentiate between a person who clicks a visual ad of a product, engages with the website and decides the product isn’t quite right for them, versus a person who clicks a visual ad of the product, engages with the website and then decides that while they most likely will buy, they first want to compare prices elsewhere and wait for payday?

It ultimately comes down to developing a better understanding of the behaviour and psychology of your consumers. There are often more reasons not to buy something than there are to buy it, so we must begin to dig much deeper.

It starts with research: understanding consumer behaviour to uncover the ‘why’ behind the engagement – as well as the ‘why not’. Is it to do with affordability, a lack of urgency, or too much choice? Or is it down to concerns over compromise, distraction, likeability, trust, principles, ethics… and so much more? The conscious and subconscious reasons for not proceeding can be many and varied.

Behavioural insight often starts with old-fashioned methods, like actually talking to people. Focus groups, surveys and questionnaires are often seen as archaic by digital-first businesses, but they provide the insights that help you identify where to start looking in the data.

Don’t chase every would-be buyer

We have to measure in different ways than before. Parsing small but significant signals of consumer intent, such as attention mapping, engagement depth, dwell time and frequency of interaction, will help to distinguish a genuinely interested buyer from a passer-by.

By identifying and excluding those who have shown signals of dis-intent, we can focus our energy on more qualified customers, while the same data informs how we adapt our customer journeys to capitalize on the ‘likely buyers’.
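For readers who want something concrete, here is a minimal sketch of how engagement signals might be combined to separate likely buyers from passers-by. It is an illustration under stated assumptions, not a method the article prescribes: the signal names, weights and thresholds (`WEIGHTS`, `EXCLUDE_BELOW`, `LIKELY_ABOVE`) are hypothetical and uncalibrated, attention mapping is left out because it needs dedicated tooling, and a real setup would learn weights from actual conversion outcomes rather than hand-picking them.

```python
from dataclasses import dataclass

# Hypothetical, uncalibrated weights and thresholds, for illustration only.
WEIGHTS = {"dwell_seconds": 0.01, "pages_viewed": 0.5, "sessions": 1.0}
EXCLUDE_BELOW = 2.0  # scores below this are treated as a dis-intent signal
LIKELY_ABOVE = 4.0   # scores above this are treated as likely buyers


@dataclass
class Visitor:
    visitor_id: str
    dwell_seconds: float  # total time spent on product pages
    pages_viewed: int     # rough proxy for engagement depth
    sessions: int         # frequency of interaction (return visits)


def intent_score(v: Visitor) -> float:
    """Simple linear score: weighted sum of the engagement signals."""
    return (WEIGHTS["dwell_seconds"] * v.dwell_seconds
            + WEIGHTS["pages_viewed"] * v.pages_viewed
            + WEIGHTS["sessions"] * v.sessions)


def segment(visitors: list[Visitor]) -> dict[str, list[str]]:
    """Split visitors into retargeting segments by intent score."""
    segments = {"likely_buyer": [], "nurture": [], "exclude": []}
    for v in visitors:
        score = intent_score(v)
        if score < EXCLUDE_BELOW:
            segments["exclude"].append(v.visitor_id)  # stop chasing
        elif score > LIKELY_ABOVE:
            segments["likely_buyer"].append(v.visitor_id)
        else:
            segments["nurture"].append(v.visitor_id)  # e.g. comparing prices
    return segments


if __name__ == "__main__":
    visitors = [
        Visitor("passer_by", dwell_seconds=10, pages_viewed=1, sessions=1),
        Visitor("comparer", dwell_seconds=90, pages_viewed=3, sessions=1),
        Visitor("returning", dwell_seconds=240, pages_viewed=6, sessions=3),
    ]
    print(segment(visitors))
    # {'likely_buyer': ['returning'], 'nurture': ['comparer'], 'exclude': ['passer_by']}
```

The point of the three-way split is that excluding a visitor is a deliberate decision rather than a default: the middle ‘nurture’ segment holds people like the shopper waiting for payday, who still warrant a tailored journey, while only the clearest low-intent signals drop someone out of retargeting.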

We ultimately need to be better at understanding our customers’ wants and needs. And a key part of this is knowing when to pursue them, and when to let them go. Algorithms have made it harder to do the latter, as they miss the context and the cognitive reasoning in the mind of the decision-maker.

Those are the gaps we need to fill, and it's blending behavioural insights with your machine learning tools that will not only help marketers get more from their advertising spend, but also bring relevance back to the consumer.

Like I said – win-win.

Feature Image Credit: Remy Gieling via Unsplash