One of the earliest turning points in personal branding, one that made career-minded professionals understand that they’re responsible for their careers and the visibility that shapes them, was the launch of LinkedIn in 2003. Since then, career visibility has followed a simple rule: polish your resume, keep your LinkedIn profile current and compelling, and show up to meetings awake. But that rule no longer holds, thanks to AI.

In The Age Of AI, Career Visibility Works Differently

Although we’ve all become skilled Googlers, search is no longer a simple query-and-results experience. It’s increasingly AI-assisted. People are asking Google, other search engines, and AI platforms questions like “Who’s the leading expert in storytelling?” or “Identify people who understand video production and graphic design.” Adapting to this shift is often referred to as Generative Engine Optimization, or GEO. Unlike traditional SEO, which focuses on ranking pages, GEO is about making your expertise easy for AI systems to understand, trust, and recommend. But AI tools and the AI summaries that appear atop Google searches often have little or no access to your LinkedIn profile. That means the hard work you did to make it reflect who you are and what makes you exceptional no longer delivers the same visibility it once did.

At the start of the personal branding boom, I recommended that professionals have a brief website/blog to showcase their expertise. LinkedIn, at the time, was rudimentary in what it offered, and with a website you had total control of how you tell the world about yourself. Over the years, though, LinkedIn has added features that made your profile a near equivalent of having your own home on the web. The customizable banner, the Featured section that allows you to use multimedia to highlight your brilliance, and the ability to include long-form content to showcase your thought leadership are just a few of the many enhancements LinkedIn has made over the past two decades. But, because LinkedIn is a mostly closed ecosystem, accessing much of its content requires authentication. That means AI systems have limited crawl access, limited visibility of content that is public, and may not be able to attribute content you created to you. That’s a major personal branding challenge.

What AI Search Changes About Personal Branding

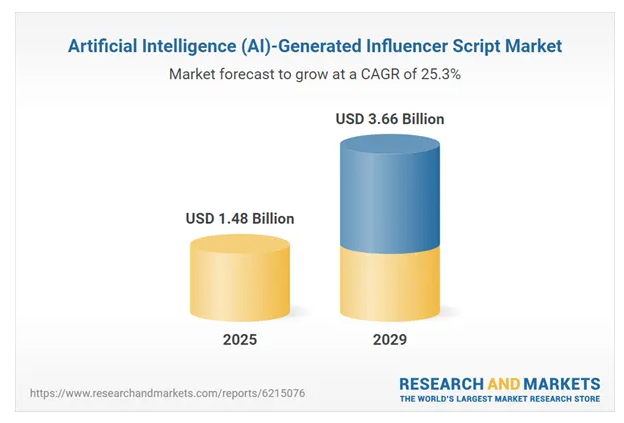

If you don’t own a piece of the internet that AI can actually read, you’re invisible to a growing share of opportunities. When an AI Overview is present, the average click-through rate for top-ranking organic links can drop by 34.5% to nearly 50%, according to Pew Research Center. People are relying on the AI summaries to answer their questions. That’s why a personal website is valuable once again, this time as your AI-readable career home base. AI systems favor:

- Open, crawlable content

- Clear authorship

- Consistent themes across pages

- Signs of expertise over time

AI looks for structure, clarity, and patterns. Different audience, different rules. And the impact on your career can be serious. If AI can’t see you, it can’t recommend you.
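If you want to confirm that your site really is open and crawlable, one quick check is to read your robots.txt file the way a crawler would. The sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs, and the `can_crawl` helper are illustrative assumptions, and `GPTBot` is used only as one example of an AI crawler's user-agent name. It tests the rules you publish, not whether any particular AI system actually visits your site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a personal site (an assumption for illustration):
# everything is open to crawlers except a drafts folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/about", "/articles/my-framework", "/drafts/idea"):
        url = "https://example.com" + path
        print(path, can_crawl(ROBOTS_TXT, "GPTBot", url))
```

If a page you want to be known for comes back as not crawlable, the rule blocking it is the first thing to revisit.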

What A Personal Website Does That LinkedIn Can’t

Having your own website puts you in control of three things that AI cares deeply about.

- Context. You can explain not just what you do, but why you do it, who you help, and how you think.

- Depth. AI favors original thinking. Articles, insights, frameworks, and your unique point of view matter more than job titles.

- Ownership. Your site is stable. Platforms and algorithms come and go. Headlines change. Your site is the one place your story doesn’t get rearranged by someone else’s design team.

How AI Actually Finds People

AI tools don’t search the way you do. They synthesize. They look for:

- Repeated themes across content

- Clear positioning language

- Specific problems you solve

- Evidence you’ve been thinking about this for a while

They reward clarity over cleverness. Specificity over buzzwords. Humanity over hype. Those are key branding trends for 2026 and beyond. And that’s good news for those who seek to be real in the virtual world. If your expertise is buried inside a profile behind a login, AI wasn’t designed to connect the dots. Your website, though, gives it dots to connect.

What To Include In A Career-Smart Website

Here’s the good news. Having your own website does not mean you need 20 pages of content and an intricate design with multiple tabs. What you need is brand clarity. At a minimum, include these five elements.

- A clear homepage statement. In plain language, say who you help, what you help with, and why it matters. No mystery. No keyword games. AI prefers direct sentences.

- A human About page. Tell your story like a person, not a resume. What life experiences shaped your thinking? What do you believe? What’s your purpose? This is gold for AI and even better for building an emotional connection with fellow humans.

- Proof of thinking. Articles, essays, talks, newsletters, or case studies. Original content screams expertise far louder than boring, trite jargon like “results-driven, team-oriented professional.”

- A focus area or services page. Be specific about your primary focus area, not all the things you can do. Focus on just those you want to be known for. AI rewards focus, and personal branding is about being known for something, not 100 things.

- Demonstration of credibility. Include media mentions, speaking, certifications, notable clients (for brand association), and projects. These help you build trust with both humans and machines.

AI Visibility Best Practices Without The Tech Headache

You don’t need to be an SEO wizard. You just need to be consistent.

- Use the same language across pages. If you help leaders build thought leadership, say it more than once. AI notices patterns.

- Write like you talk. AI models are trained on natural language. Stiff corporate writing actually works against you.

- Update occasionally. Fresh content signals relevance, but you don’t need a blog schedule that takes over your life. One thoughtful, on-brand piece every two to three months will suffice.

- Make authorship obvious. Your name, bio, and perspective should be clear on every piece of content. Anonymous wisdom doesn’t rank, and it won’t get associated with you.

- Connect your site to LinkedIn. Think of LinkedIn as the front porch and your website as the rest of the house.
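For readers who do want one small technical step, a common way to make authorship obvious and tie your site to your LinkedIn profile is to add schema.org `Person` markup to your pages as JSON-LD. The sketch below builds that snippet in Python. The `person_jsonld` helper and every value passed to it (name, title, URLs) are hypothetical placeholders, and whether a given AI system actually uses this markup is an assumption, not a guarantee.

```python
import json


def person_jsonld(name, job_title, site_url, description, same_as):
    """Build a <script> tag holding schema.org Person markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "url": site_url,
        "description": description,
        # sameAs lists other profiles that belong to the same person.
        "sameAs": list(same_as),
    }
    # Escape "</" so a stray "</script>" in the text cannot end the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(
        person_jsonld(
            name="Jane Doe",
            job_title="Leadership Coach",
            site_url="https://example.com",
            description="I help leaders build thought leadership.",
            same_as=["https://www.linkedin.com/in/example"],
        )
    )
```

Paste the printed snippet into the head of your site's pages, and keep the description identical to the positioning language you use everywhere else.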

Your Website Signals A More Modern Career Strategy In The Age Of AI

This isn’t really about websites. It’s about augmenting platform-dependent visibility with owned visibility. You still need to master LinkedIn, but AI is changing how opportunity finds you. Recommendations will increasingly come from synthesis, not SEO or search results. The people who show up will be the ones who make it easy for AI to understand who they are, what they stand for, and why they matter. In other words, get clear on your personal brand!

It’s Time To Build An AI-Friendly Personal Brand Engine

In a world where AI is doing the asking, your website is how you answer before anyone even knows your name. And the rules of working with AI are empowering. They go beyond trying to game algorithms by having all the right keywords in everything you post. The next era of visibility goes back to the origins of personal branding. It’s about being the real, human you, consistently, without apology or hesitation.

Feature image credit: Getty

By William Arruda

Find William Arruda on LinkedIn. Visit William’s website.

William Arruda is a keynote speaker, author, and personal branding pioneer. He speaks on branding, leadership, and AI. Watch his AI-Powered Personal Branding Session to learn more about the intersection of AI and personal branding.